In the bustling world of customer support, artificial intelligence (AI) has emerged as a transformative force. Call centers, often the first line of defense in customer service, are increasingly turning to AI in quality assurance to enhance the customer experience (CX). While the potential benefits are immense, including increased efficiency and personalized service, the road to AI integration is fraught with challenges. In a recent webinar, experts discussed how businesses can use AI call center software effectively, navigate the common pitfalls of proprietary language models, and ultimately improve their customer interactions.

This post explores how businesses can approach contact center AI solutions strategically and avoid the traps that could hinder their progress. In doing so, companies can set the stage for a future where technology and human ingenuity combine to create exceptional customer experiences.

AI Trends & Impacts of Rapid Advancement on CX

Artificial intelligence, particularly in the realm of large language models (LLMs) like OpenAI's GPT series, is evolving at an unprecedented pace. Each new version brings substantial enhancements in performance, demonstrating a remarkable ability to understand and respond to complex customer queries. The shift from GPT-3.5 to the more recent GPT-4, for example, represents a significant leap forward, offering refined capabilities that are becoming indispensable in customer service.

As these technologies advance, the cost to implement them is dramatically decreasing. This change is making powerful AI tools accessible to a broader range of businesses, transforming customer service landscapes. Small to mid-sized enterprises can now leverage sophisticated AI solutions that were once only feasible for large corporations, leveling the playing field and expanding opportunities for innovation in customer engagement.

While the integration of AI into customer service channels promises enhanced efficiency and improved customer experiences, it also brings challenges. Rapid adoption without adequate safeguards can lead to errors that may tarnish a brand's reputation. For example, inaccuracies from chatbots—due to insufficient testing or calibration—have sometimes led to customer dissatisfaction, highlighting the importance of cautious and thoughtful AI deployment.

With the increasing complexity of AI-driven interactions, ensuring the accuracy of AI outputs is more crucial than ever. Implementing rigorous testing protocols and maintaining oversight are essential to prevent potential errors from affecting customer interactions. Companies must prioritize these practices to safeguard the integrity of their customer service operations, ensuring that AI tools enhance rather than compromise their service quality.
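As a rough illustration of what a testing protocol can look like, the sketch below checks a chatbot's answers against a small "golden set" of questions, each with facts the answer must mention. Everything in it is hypothetical: `stub_bot` stands in for a real model call, and the questions and policies are invented for the example.

```python
from typing import Callable

# Hypothetical golden set: each case lists facts the answer must mention.
GOLDEN_CASES = [
    {"question": "What is the refund window?", "must_include": ["30 days"]},
    {"question": "How fast does support reply?", "must_include": ["24 hours"]},
]


def stub_bot(question: str) -> str:
    """Stand-in for a real model call (an assumption for this sketch)."""
    canned = {
        "What is the refund window?": "You can request a refund within 30 days.",
        "How fast does support reply?": "We usually reply within two days.",
    }
    return canned.get(question, "")


def evaluate(answer_fn: Callable[[str], str], cases: list) -> tuple:
    """Return (pass_rate, failures); failures pairs each question with its missing facts."""
    if not cases:
        raise ValueError("at least one test case is required")
    failures = []
    for case in cases:
        answer = answer_fn(case["question"]).lower()
        missing = [fact for fact in case["must_include"] if fact.lower() not in answer]
        if missing:
            failures.append((case["question"], missing))
    pass_rate = 1 - len(failures) / len(cases)
    return pass_rate, failures


pass_rate, failures = evaluate(stub_bot, GOLDEN_CASES)
print(f"pass rate: {pass_rate:.0%}")
for question, missing in failures:
    print(f"FAILED: {question} (missing: {missing})")
```

A substring check like this only catches missing facts. It does not detect an answer that states something wrong, which is one reason human review still matters.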

Don’t Be Fooled by the Allure of Proprietary Models

For many organizations, developing proprietary AI models in-house is an attractive idea, especially when they possess unique data specific to their domain. The allure lies in the promise of specialized solutions tailored to address distinct challenges. However, the challenges of developing these models often outweigh the potential benefits.

Firstly, proprietary models require substantial investment, not just in financial resources but also in terms of time, infrastructure, and technical expertise. Building such a system is a lengthy process, often spanning many months, with continuous retraining needed to ensure it remains up-to-date. Furthermore, because these models are built for niche applications, they tend to lack the versatility and adaptability required to tackle new challenges, limiting their long-term utility.

General-purpose models, like OpenAI's GPT-4, have evolved rapidly, often surpassing proprietary models in both adaptability and performance. The speed of development in general AI is making it increasingly difficult for specialized models to keep pace.

Real World Example

Bloomberg’s finance-specific large language model (LLM), BloombergGPT, is a telling example of the limitations of proprietary AI. Developed at a reported cost of $10 million and trained on Bloomberg's unique financial data, the model initially outperformed GPT-3.5, demonstrating the potential of domain-specific models. However, within about six months, the general-purpose GPT-4 had overtaken it, reportedly outperforming BloombergGPT across financial tasks. Bloomberg's considerable investment was quickly eclipsed, highlighting how unpredictable advances in the AI field can be.

In a recent webinar, MaestroQA’s CEO Vasu Prathipati discussed the Bloomberg case and the inherent risks of investing heavily in proprietary AI models. He suggested that such ventures can feel like walking into an inevitable defeat. Despite Bloomberg's substantial $10 million investment in a specialized financial model, he pointed out, the rapid advancement of general-purpose models like GPT-4 quickly overshadowed its efforts. This rapid obsolescence undermined the model's initial success and much of the value of the investment, illustrating a broader industry trend: the speed of AI development can nullify even the most focused and well-funded proprietary projects.

His point illustrates the risks organizations face when relying solely on proprietary models. As AI technology continues to evolve rapidly, the unpredictability of these advancements makes investing in proprietary systems a risky strategy that often leaves organizations fighting a losing battle.

Pitfalls of Proprietary Models

While proprietary models can seem appealing for organizations with domain-specific data, they come with notable challenges that hinder their widespread utility. From their limited adaptability to the significant investment required and data demands, these models can struggle to deliver accurate results despite substantial investments. Let's explore these pitfalls in detail.

- Narrow vs. Broad Capabilities: Proprietary models are often highly specialized and designed for specific tasks, which limits their versatility. Because they rely on limited training data, they often need extensive time to adjust to new scenarios, reducing their flexibility and broader utility. In contrast, general-purpose models like GPT-4 have broader applicability and can be fine-tuned to different use cases.

- Significant Investment Requirements: Developing proprietary models demands considerable financial resources, time, and technical expertise. The training process is inherently time-consuming, often spanning several months and requiring multiple iterations for refinement. Prospective customers who have deployed competitors' proprietary models often struggle to achieve accurate results because of insufficient training data and long training times. These models also require ongoing maintenance to stay current with the latest domain-specific data, adding to the operational overhead.

- Data Requirements: Proprietary models require vast amounts of high-quality domain-specific data. Gathering and annotating this data is a labor-intensive process. Even with such data, the models can still fall short in accuracy.

- Lack of Accuracy: Despite heavy investments in their development, proprietary models frequently struggle with accuracy. Their performance is often hindered by the limited scope and quantity of training data available. For instance, in a notable case, Air Canada's chatbot provided incorrect information to a traveler, leading to a legal ruling that held the airline accountable for the chatbot's advice. This incident underscores the importance of accuracy and the potential consequences of errors in proprietary AI systems.

In contrast, general-purpose models like GPT-4, supported by vast datasets and significant funding, typically offer more robust and accurate performance. Their extensive training across a wide range of data enhances both their accuracy and their adaptability to various tasks, making them more reliable and versatile than many proprietary alternatives.

The pitfalls of proprietary models highlight their significant limitations in comparison to general-purpose AI systems. Their narrow capabilities, high investment requirements, demanding data needs, and accuracy challenges make them less adaptable and effective. By recognizing these challenges, organizations can make more informed decisions and lean toward solutions that better align with their strategic goals and customer service needs.

MaestroQA’s Approach

In the evolving landscape of AI for customer experience, MaestroQA offers a solution that balances precision, adaptability, and compliance. By combining general-purpose AI models with domain-specific data, prompt engineering, and human oversight, MaestroQA provides a robust framework that meets diverse organizational needs while minimizing risks. Let's delve into how MaestroQA’s Playground Approach harnesses these elements for optimal performance.

The Playground Approach

MaestroQA’s Playground Approach is grounded in the principle that prompt engineering and retrieval-augmented generation (RAG) are key to maximizing the potential of general-purpose AI models. Instead of constructing an LLM from the ground up, this approach leverages existing models while incorporating specific prompts and domain-specific data to provide accurate, context-aware answers.

Sophisticated prompt engineering is used to guide foundational models with detailed contextual examples. For instance, in customer service, the model is given customer-specific scenarios and user queries so it can tailor its responses. Retrieval-augmented generation (RAG) takes this a step further by querying internal databases for relevant data and supplying that data to a general-purpose model as context, so the generated answer is grounded in the organization's own information. The result is more precise, personalized, and relevant information for customers.
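To make the idea concrete, here is a minimal sketch of the retrieve-then-prompt pattern, not MaestroQA's implementation. It assumes a tiny in-memory knowledge base and uses simple word overlap for retrieval, where a production system would more likely use a search index or embeddings. The names `KNOWLEDGE_BASE`, `FEW_SHOT`, `retrieve`, and `build_prompt` are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str


# Hypothetical internal knowledge base; a real system would query a database or index.
KNOWLEDGE_BASE = [
    Doc("refund-policy", "Refunds are issued within 5 business days of approval."),
    Doc("shipping-policy", "Standard shipping takes 3 to 7 business days."),
    Doc("password-reset", "Reset your password from the account settings page."),
]

# Hypothetical few-shot example used to steer tone and format (prompt engineering).
FEW_SHOT = [
    ("Where is my invoice?", "You can download invoices from the Billing tab."),
]


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list, k: int = 2) -> list:
    """Return up to k docs sharing at least one word with the query, best match first."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc.text)), doc) for doc in docs]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in matches[:k]]


def build_prompt(query: str, context: list, examples: list) -> str:
    """Assemble instructions, few-shot examples, retrieved context, and the question."""
    lines = ["Answer using only the context below. If the context is insufficient, say so.", ""]
    for question, answer in examples:
        lines += [f"Example question: {question}", f"Example answer: {answer}", ""]
    lines.append("Context:")
    lines += [f"- [{doc.doc_id}] {doc.text}" for doc in context]
    lines += ["", f"Customer question: {query}"]
    return "\n".join(lines)


query = "How long do refunds take?"
context = retrieve(query, KNOWLEDGE_BASE)
prompt = build_prompt(query, context, FEW_SHOT)
print(prompt)  # this string would then be sent to whichever general-purpose model you use
```

The model call itself is left out on purpose: because the prompt is just a string, the same retrieval and prompt-building code can be pointed at a different model as newer ones are released.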

Flexibility and Experimentation

MaestroQA thrives on flexibility and experimentation, empowering organizations to explore and experiment with leading AI models to identify trends, compliance gaps, and coaching opportunities specific to their needs. By keeping up with the latest AI advancements, MaestroQA remains adaptable, transparent, and future-proof, ensuring clients access the best tools while staying cost-efficient.

Human-in-the-Loop Framework

A robust Human-in-the-Loop framework serves as a safety net to validate responses generated by AI. This human oversight ensures alignment with the organization’s objectives, mitigating potential legal liabilities. By incorporating internal teams or external experts for validation, MaestroQA reduces the risks of AI hallucinations, enabling trustworthy, accurate responses while maintaining compliance.
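One simple way to picture a human-in-the-loop gate is a routing step that sends low-confidence or sensitive AI drafts to a review queue instead of straight to the customer. The sketch below is illustrative only: the `confidence` score, the 0.8 threshold, and the `SENSITIVE_TERMS` list are assumptions for the example, not part of any described product.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    ticket_id: str
    answer: str
    confidence: float  # hypothetical score in [0, 1]; how it is produced is out of scope


# Hypothetical topics that always get a human look, regardless of confidence.
SENSITIVE_TERMS = ("refund", "legal", "compensation")


def needs_human_review(draft: Draft, threshold: float = 0.8) -> bool:
    """Flag drafts that are low-confidence or touch a sensitive topic."""
    text = draft.answer.lower()
    return draft.confidence < threshold or any(term in text for term in SENSITIVE_TERMS)


def route(drafts: list, threshold: float = 0.8) -> tuple:
    """Split drafts into (auto_approved, review_queue), preserving input order."""
    auto_approved, review_queue = [], []
    for draft in drafts:
        if needs_human_review(draft, threshold):
            review_queue.append(draft)
        else:
            auto_approved.append(draft)
    return auto_approved, review_queue


drafts = [
    Draft("T1", "Your order ships tomorrow.", 0.95),
    Draft("T2", "You are entitled to a refund.", 0.97),
    Draft("T3", "Try restarting the app.", 0.55),
]
auto_approved, review_queue = route(drafts)
print("auto-approved:", [d.ticket_id for d in auto_approved])
print("needs review:", [d.ticket_id for d in review_queue])
```

Here T2 is held for review despite its high confidence because it promises a refund, the kind of commitment for which an organization can be held accountable.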

In short, MaestroQA’s unique approach combines the strengths of general-purpose models with the adaptability of prompt engineering and retrieval-augmented generation to deliver reliable, scalable, and transparent AI solutions that align perfectly with organizational goals.

AI-Driven Insights in Action

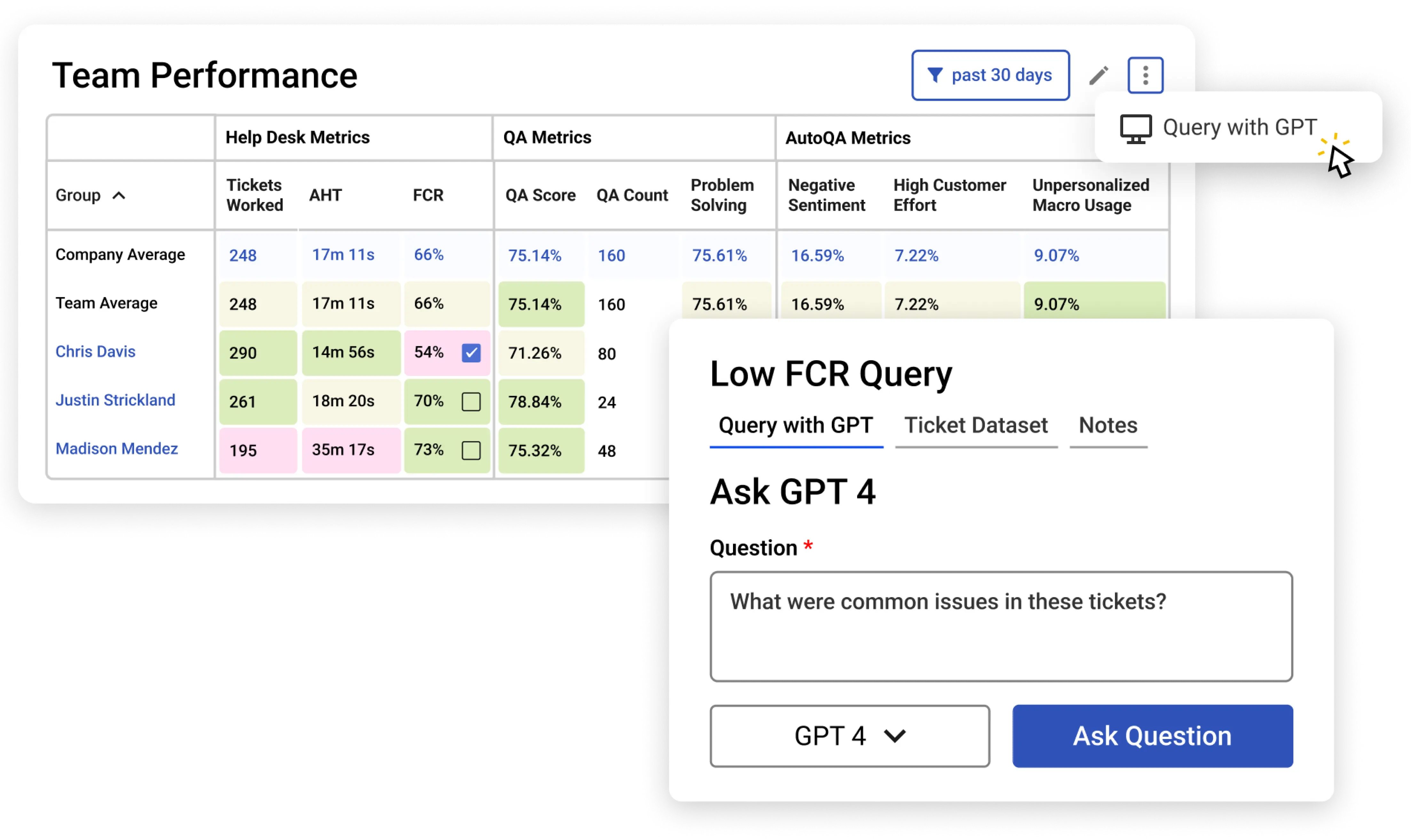

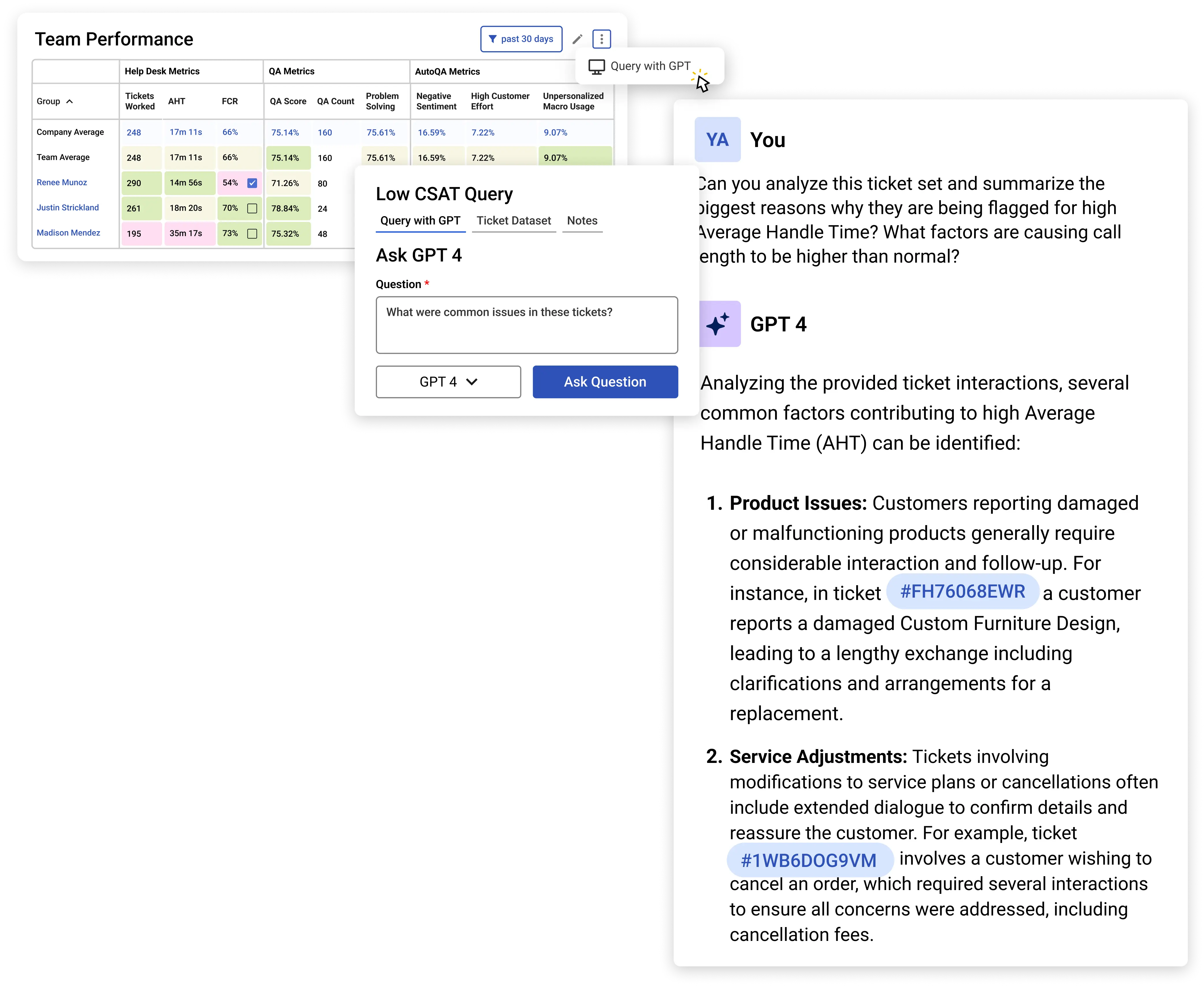

During our recent webinar demonstration, we highlighted the potential of MaestroQA’s AI-driven tools. Our approach uses GPT-based AI technology to deliver insights and streamline operational efficiency.

The journey begins with our Performance Dashboard, which integrates advanced AI models like ChatGPT, Claude, and others. This dashboard serves as the first step in identifying hotspots in the metrics that matter to the business. In this demo, that metric was customer dissatisfaction (DSAT).

From this starting point, customer service teams can drill down into specific subsets of related tickets to conduct root cause analysis (RCA). Teams can then use MaestroQA's GPT-powered prompting to surface detailed trends and insights that might otherwise remain obscured. These prompts support nuanced analysis, turning broad performance metrics into actionable intelligence that improves both compliance and coaching.

For instance, in the demo, we showed how identifying DSAT hotspots through our dashboard allows teams to zero in on underlying issues and use AI-enhanced prompts to uncover the root causes of dissatisfaction. This information is invaluable in refining customer service strategies and delivering tailored solutions that improve customer interactions.
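The hotspot-then-drill-down workflow can be sketched in a few lines. This is a simplified illustration rather than how the dashboard works internally: the ticket fields (`category`, `dissatisfied`), the sample data, and the `min_tickets` and `min_rate` thresholds are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical ticket export; field names are assumptions for this sketch.
TICKETS = [
    {"id": 1, "category": "billing", "dissatisfied": True},
    {"id": 2, "category": "billing", "dissatisfied": True},
    {"id": 3, "category": "billing", "dissatisfied": False},
    {"id": 4, "category": "billing", "dissatisfied": True},
    {"id": 5, "category": "shipping", "dissatisfied": False},
    {"id": 6, "category": "shipping", "dissatisfied": True},
    {"id": 7, "category": "shipping", "dissatisfied": False},
    {"id": 8, "category": "shipping", "dissatisfied": False},
    {"id": 9, "category": "login", "dissatisfied": True},
]


def dsat_hotspots(tickets: list, min_tickets: int = 3, min_rate: float = 0.3) -> list:
    """Return (category, DSAT rate) pairs above both thresholds, highest rate first."""
    totals = Counter(t["category"] for t in tickets)
    dsat = Counter(t["category"] for t in tickets if t["dissatisfied"])
    hotspots = []
    for category, total in totals.items():
        rate = dsat[category] / total
        if total >= min_tickets and rate >= min_rate:
            hotspots.append((category, rate))
    hotspots.sort(key=lambda item: item[1], reverse=True)
    return hotspots


def drill_down(tickets: list, category: str) -> list:
    """Return IDs of dissatisfied tickets in a category, ready for root cause analysis."""
    return [t["id"] for t in tickets if t["category"] == category and t["dissatisfied"]]


hotspots = dsat_hotspots(TICKETS)
print(hotspots)
ticket_ids = drill_down(TICKETS, "billing")
print(ticket_ids)
```

The `min_tickets` floor keeps a single unhappy ticket (the lone login ticket here) from being reported as a hotspot. The drilled-down ticket IDs are the subset whose transcripts would then be passed to a prompt for root cause analysis.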

Our playground-style approach empowers users to combine their proprietary data with leading AI models to create highly adaptive, transparent solutions. Retrieval-augmented generation enables organizations to leverage their internal databases alongside large language models, providing accurate and context-rich answers. This methodology enhances the quality of customer responses, ensuring seamless integration between MaestroQA’s data analysis tools and user interactions.

MaestroQA’s platform transforms the way organizations manage AI in customer support, offering sophisticated AI-powered insights that help teams move from surface-level observations to strategic, actionable plans.

Bringing AI Innovation to Customer Service

MaestroQA’s platform demonstrates how cutting-edge AI technology can transform customer service by offering comprehensive, data-driven insights. With GPT-powered analysis and adaptable AI models, customer service teams can explore customer interactions in-depth, identify improvement opportunities, and develop strategies that align with their objectives.

The platform's seamless integration with advanced models like GPT-4 and Claude ensures adaptability to evolving customer needs. MaestroQA empowers teams to analyze customer sentiment, spot DSAT trends, and validate results with human expertise, ensuring accurate and valuable insights.

Next Steps?

Are you ready to transform your customer service approach with MaestroQA's AI-driven insights? Dive into our comprehensive suite of tools and experience how integrating state-of-the-art models like GPT-4 can uncover new dimensions of customer satisfaction, root cause analysis, and team performance.

See how MaestroQA’s prompt engineering, flexible AI models, and human-in-the-loop validation can deliver deeper customer insights while enhancing your operational efficiency. Empower your team to excel with data-backed strategies tailored to your organization's specific needs.
